Ever wondered about the boundaries of AI and the ethics of image generation? The rapid advancement of artificial intelligence has opened doors to incredible possibilities, but it has also sparked crucial debates about its responsible use, particularly in the realm of image creation. Today, we delve into a specific area that pushes these boundaries: AI-powered "cloth removal" or "unclothing" generators.

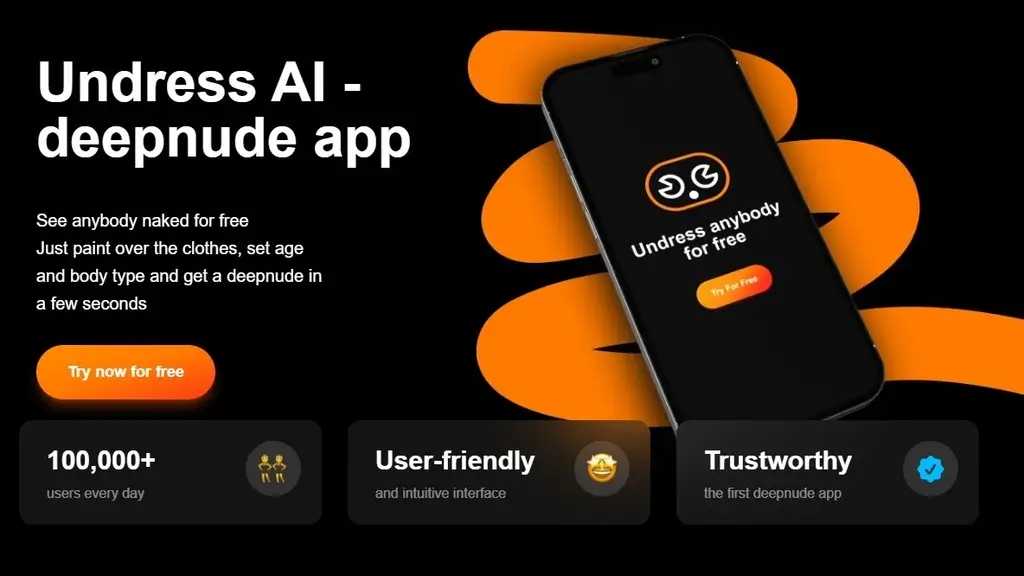

These AI tools, often advertised with phrases like "just input character descriptions like gender, body type, remove cloth, pose etc in the prompt box, and clothoff ai generator will automatically render unique images based on your prompts," promise the ability to create photorealistic images of individuals stripped of their clothing. The underlying technology relies on deep learning models trained on vast datasets of images to "fill in" what is presumed to be under clothing; the result is a synthetic guess, not a recovery of anything real. This raises serious ethical and legal questions regarding consent, privacy, and the potential for misuse.

| Category | Details |
|---|---|
| Name | AI Image Generator |
| Purpose | Generates images based on text prompts, including those involving removal of clothing. |
| Technology | Deep learning, generative adversarial networks (GANs), image synthesis. |
| Ethical Concerns | Consent, privacy violations, non-consensual deepfakes, potential for harassment and abuse, objectification, and the spread of misinformation. |
| Legal Ramifications | Potentially violates laws related to privacy, defamation, and the creation/distribution of explicit images without consent. Laws vary by jurisdiction. |
| Data Sources | Trained on massive datasets of images scraped from the internet, raising concerns about copyright infringement and biases present in the data. |
| Accessibility | Increasingly accessible through online platforms and apps, making it easier for individuals to create and share these types of images. |
| Potential Misuse | Creating revenge porn, generating images of political opponents, bullying, and harassment, and spreading false information. |
| Mitigation Strategies | Developing ethical guidelines, implementing stricter regulations, improving detection methods, and educating users about the risks and consequences. |
| Further Reading | Electronic Frontier Foundation (EFF) |

The core problem lies in the fact that these AI systems can generate images of individuals without their explicit consent. Even if the initial image used as a base is publicly available, the act of altering it to create a sexually explicit or compromising depiction constitutes a severe breach of privacy. The ease with which these images can be created and disseminated online amplifies the potential harm, leaving victims vulnerable to harassment, humiliation, and even blackmail. Moreover, the "realistic" nature of these AI-generated images can blur the line between reality and fiction, making it difficult for viewers to discern whether the image is authentic or manipulated. This can lead to the spread of misinformation and the erosion of trust in visual media.

Consider the implications for public figures. Politicians, celebrities, and activists are already subject to intense scrutiny, and the creation of AI-generated "unclothed" images could be used to damage their reputations and undermine their credibility. But the risks extend far beyond the realm of the famous. Everyday individuals could find themselves targeted by malicious actors seeking to inflict emotional distress or financial harm. The consequences can be devastating, leading to anxiety, depression, and even suicidal ideation.

The legal landscape surrounding AI-generated content is still evolving. Many jurisdictions lack specific laws to address the unique challenges posed by these technologies. Existing laws related to privacy, defamation, and the creation and distribution of explicit images may offer some protection, but they often fall short of adequately addressing the nuances of AI-generated content. The question of liability is also complex. Who is responsible when an AI system generates a harmful image? Is it the developer of the AI model, the user who inputted the prompt, or the platform that hosts the image? These questions remain largely unanswered.

Furthermore, the use of vast datasets to train these AI models raises concerns about copyright infringement and bias. Many of these datasets are compiled by scraping images from the internet without obtaining the necessary licenses or permissions. This could expose AI developers to legal action from copyright holders. Moreover, the datasets often reflect existing societal biases, which can be amplified by the AI models. For example, if the dataset contains a disproportionate number of images of women in sexually suggestive poses, the AI model may be more likely to generate "unclothed" images of women than men, perpetuating harmful stereotypes.

Beyond the legal and ethical considerations, there are also broader societal implications to consider. The proliferation of AI-generated "unclothed" images could contribute to the objectification of women and the normalization of sexual violence. It could also erode the value of consent and undermine efforts to promote healthy relationships. The creation and distribution of these images can be seen as a form of sexual harassment and a violation of human dignity.

Addressing this complex issue requires a multi-faceted approach. First and foremost, we need to raise awareness about the risks and consequences of AI-generated "unclothed" images. Education is key to empowering individuals to protect themselves and to recognize and report instances of abuse. We also need to develop ethical guidelines for AI developers and researchers, ensuring that these technologies are used responsibly and in a way that respects human rights. These guidelines should address issues such as consent, privacy, and bias.

Stricter regulations are also necessary. Governments need to enact laws that specifically address the creation and distribution of AI-generated content, including "unclothed" images. These laws should clearly define what constitutes a violation of privacy and establish appropriate penalties for offenders. They should also address the issue of liability, clarifying who is responsible when an AI system generates a harmful image.

In addition to regulations, we need to improve detection methods. Researchers are working on developing algorithms that can identify AI-generated images and distinguish them from authentic photographs. These detection tools could be used to automatically flag and remove harmful content from online platforms. However, this is an ongoing arms race, as AI generators become more sophisticated and detection methods struggle to keep pace.

Finally, we need to promote a culture of respect and consent. This means educating individuals about the importance of respecting boundaries and obtaining consent before sharing or manipulating images of others. It also means challenging harmful stereotypes and promoting positive representations of gender and sexuality. This is a long-term effort that requires a fundamental shift in attitudes and behaviors.

The rise of AI-powered "cloth removal" generators presents a clear and present danger to individuals and society as a whole. While the technology may seem novel and intriguing, the potential for misuse is immense. We must act now to mitigate the risks and ensure that these technologies are used responsibly and ethically. Failure to do so could have devastating consequences.

The challenge is significant, demanding attention from technologists, policymakers, and the public. It is essential to foster open dialogue about the responsible development and use of AI to safeguard fundamental human rights and prevent AI from becoming a tool for exploitation and abuse. The outcome hinges on our collective resolve to navigate this ethical minefield with diligence and foresight.

Consider the legal ramifications of generating and distributing these images. In many jurisdictions, doing so could violate laws related to privacy, defamation, and the creation or distribution of explicit images without consent. The legal landscape is still developing, but the risk of serious repercussions is real. These actions are not a simple prank or joke; they can carry severe, lasting legal consequences.

The data sources used to train these AI models also present concerns. These models are often trained on massive datasets of images scraped from the internet. This raises questions about copyright infringement and the potential for biases present in the data to be amplified by the AI. If the dataset contains biased or discriminatory content, the AI model is likely to perpetuate those biases, leading to unfair or discriminatory outcomes. Therefore, careful consideration must be given to the composition and quality of the training data.

Furthermore, the increasing accessibility of these AI tools makes it easier for individuals to create and share these types of images. As the technology advances, it becomes readily available to anyone, whatever their intentions. This widespread accessibility calls for increased vigilance and proactive measures to prevent misuse and abuse. The power to create such images should not be taken lightly, and its potential for harm must be carefully considered.

The potential for misuse extends to creating revenge porn, generating images of political opponents, engaging in bullying and harassment, and spreading false information. These are just a few examples of the ways in which this technology can be weaponized. The consequences of such misuse can be devastating for the victims, leading to emotional distress, reputational damage, and even physical harm. It is crucial to recognize the potential for harm and take steps to prevent it.

Therefore, developing effective mitigation strategies is essential. These strategies should include establishing ethical guidelines, implementing stricter regulations, improving detection methods, and educating users about the risks and consequences of creating and sharing AI-generated "unclothed" images. A comprehensive approach is necessary to address this complex issue effectively. By working together, we can minimize the risks and harness the potential benefits of AI while protecting individuals' rights and dignity.